Gamification improves the quality of student peer code review

Authors: Theresia Devi Indriasari, Paul Denny, Danielle Lottridge, Andrew Luxton-Reilly

Date: 2023-07-03

Background and Context: Peer code review activities provide well-documented benefits to students in programming courses, whether as authors or reviewers of code. Students develop relevant skills through exposure to alternative coding solutions, producing and receiving feedback, and collaboration with peers. Despite these benefits, low student motivation has been identified as one of the challenges leading to poor engagement and substandard review quality.

- RQ1: How does gamification impact the quality of written feedback? RQ2: How do students perceive the value of feedback produced and received? (@indriasari2023, 2)

- Gamification in student peer code review has therefore been shown to influence student perceptions, and the quantity of comments and reviews submitted, but we know little about the quality of feedback produced under gamification conditions. The quality of reviews is crucial as we do not want student[s] to sacrifice quality for quantity. Therefore, we investigate the impact of gamification on the quality of student code reviews. (@indriasari2023, 3)

- In this work, we use {Anonymized}, a web-based tool that facilitates peer code review activities in programming courses. The tool supports all of the core functions of a peer review activity (Hamer, Kell, & Spence, 2007; Indriasari et al., 2020a), enabling students to upload their source code for a programming assignment, anonymously see and review code produced by their peers that is randomly assigned to them, and view and rate feedback they receive on their own work. (@indriasari2023, 3)

- What's the dang tool?!

- When reviewing a code submission using {Anonymized}, the reviewer provides both numerical scores and open-response written feedback. (@indriasari2023, 4)

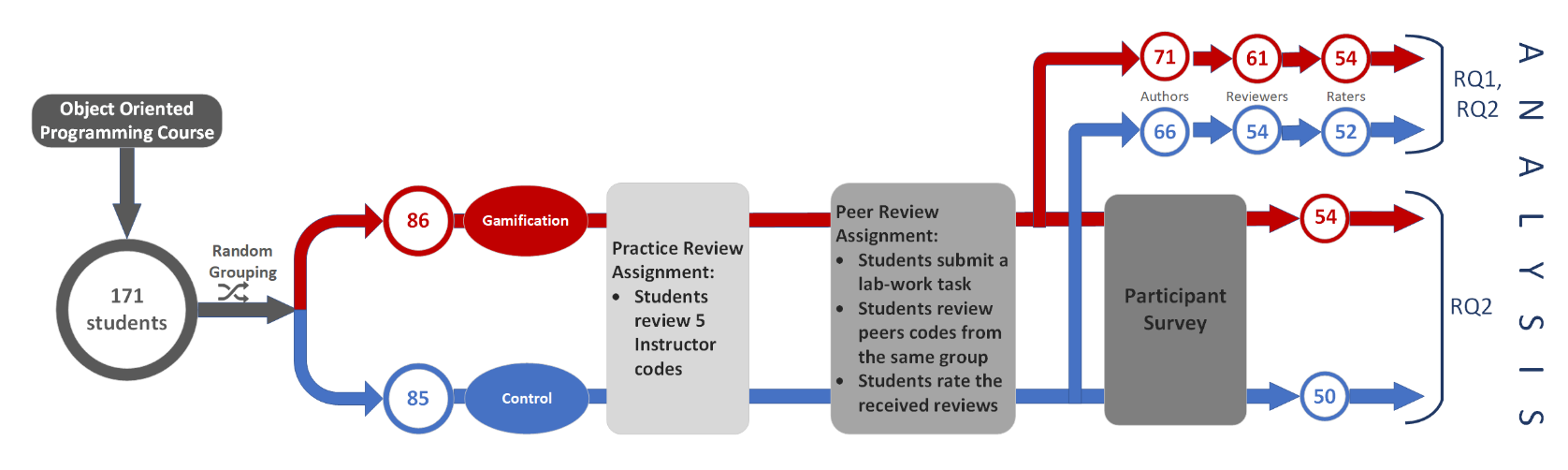

- Figure 2. An overview of the experimental study and participant counts. Activities are shown in grey boxes of different shades, and participant counts are shown in red circles for the gamification condition and blue circles for the control condition.

- Definitely quantitative.

- We used criteria derived from Stegeman, Barendsen, and Smetsers (2014); Stegeman et al. (2016) such as Variable Names, Expression, and Control Flow. We combined similar criteria into a single criterion to simplify and reduce the number of criteria used. The Inline Comments and Header Comments were merged into a single criterion called Comments, and Layout and Formatting were incorporated into a single Layout criterion, and finally Decomposition and Modularisation criteria were combined into Decomposition criterion. Overall, we used six criteria to assess the code quality in the practice review and peer review assignment. (@indriasari2023, 7)

- Rubric criteria conceptual framework.
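
The criteria merge described in the quote can be written down as a small lookup table. This is a minimal Python sketch: the names `KEPT`, `MERGED`, `rubric_criteria`, and `merged_into` are hypothetical, and it assumes the six final criteria are exactly the three named in the quote (Variable Names, Expression, Control Flow) plus the three merged ones. The quote introduces the retained criteria with "such as", so the exact list is an assumption.

```python
# Hypothetical encoding of the rubric: ASSUMES the six criteria are the three
# named in the quote plus the three produced by merging pairs of criteria.
KEPT = ["Variable Names", "Expression", "Control Flow"]

# Merged criterion -> the original criteria it replaces (as stated in the quote).
MERGED = {
    "Comments": ("Inline Comments", "Header Comments"),
    "Layout": ("Layout", "Formatting"),
    "Decomposition": ("Decomposition", "Modularisation"),
}


def rubric_criteria() -> list[str]:
    """Return the final rubric criteria: kept criteria followed by merged ones."""
    return KEPT + list(MERGED)


def merged_into(original: str) -> str:
    """Map an original criterion name to the rubric criterion that covers it."""
    for target, sources in MERGED.items():
        if original in sources:
            return target
    if original in KEPT:
        return original
    raise KeyError(f"not a criterion in this sketch: {original}")


print(len(rubric_criteria()))          # prints 6
print(merged_into("Header Comments"))  # prints Comments
```

Keeping the merge as data rather than prose makes it easy to check that every original criterion lands in exactly one rubric criterion.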

- During the last week of the course, students in both groups were invited to participate in a survey. The survey questions asked students about their perception of the feedback they provided, and the feedback they received during the peer code review activities. The survey included two Likert-scale questions and an open-ended question, as follows:
  - SQ1. “Overall, I found that the feedback that I received was useful to me.”
  - SQ2. “Overall, I think the feedback that I provided would be useful for my peers.”
  - SQ3. “Please comment on the quality of feedback you read during peer code review.” (@indriasari2023, 9)

- Survey to measure student perception of feedback.

- The two main categories were general and specific comments (Hamer et al., 2015). The general comments were high-level and did not target particular elements of the code. Specific comments focused on some aspects of the code or criteria of code assessment. These two categories were further divided into negative, positive, neutral, and advice/action (Hamer et al., 2015). A comment was classified as negative if it highlighted an aspect of the code that was inadequate, and positive if it highlighted something that was completed well. In contrast, comments that were not obviously positive or negative in tone were classified as neutral. Advice/action comments provided actionable suggestions for making modification or improvement to the programming code. We also used the personal voice and off-topic categories described by Hamer et al. (2015). Comments were coded as personal voice if they included personal features such as emoticon, encouragement, or directing a comment towards the author of the code rather than focusing on the code. Off-topic comments were unrelated to the project. (@indriasari2023, 9)

- Coding scheme used to classify the quality of students' comments.
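
The coding scheme crosses two scopes (general, specific) with four tones (negative, positive, neutral, advice/action), plus the personal voice and off-topic categories. Below is a minimal Python sketch of that label space with a tally helper. It assumes each coded comment receives exactly one label and that personal voice and off-topic are not split by scope; neither assumption is stated in the quote. `Scope`, `Tone`, `STANDALONE`, `code_labels`, and `tally` are hypothetical names, and the sketch only models the categories; it does not classify comments automatically.

```python
from collections import Counter
from enum import Enum
from itertools import product


class Scope(Enum):
    GENERAL = "general"    # high-level, not aimed at particular code elements
    SPECIFIC = "specific"  # aimed at an aspect of the code or an assessment criterion


class Tone(Enum):
    NEGATIVE = "negative"     # highlights something inadequate
    POSITIVE = "positive"     # highlights something done well
    NEUTRAL = "neutral"       # not obviously positive or negative
    ADVICE = "advice/action"  # actionable suggestion for modification or improvement


# ASSUMPTION: these categories stand alone and are not crossed with Scope.
STANDALONE = ("personal voice", "off-topic")


def code_labels() -> list[str]:
    """Enumerate every label: each scope/tone pair plus the standalone ones."""
    grid = [f"{scope.value} {tone.value}" for scope, tone in product(Scope, Tone)]
    return grid + list(STANDALONE)


def tally(coded_comments: list[str]) -> Counter:
    """Count comments per label, assuming one label per coded comment."""
    counts = Counter({label: 0 for label in code_labels()})
    for label in coded_comments:
        if label not in counts:
            raise ValueError(f"unknown label: {label!r}")
        counts[label] += 1
    return counts


# Example with made-up labels (not data from the study).
example = tally(["specific advice/action", "general positive", "specific advice/action"])
print(example["specific advice/action"])  # prints 2
```

Running `tally` separately on each condition's coded comments would give per-category counts of the kind behind the finding that the gamification group produced more specific advice, general advice, and compliments (positive comments).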

- Despite these benefits, low student motivation has been identified as one of the challenges leading to poor engagement and substandard review quality. (@indriasari2023, 1)

- Motivation

- We observe that a significantly greater proportion of students in the gamification group submitted more reviews than required (n > 5) (24.59%) compared to the control condition (9.26%) (χ2 (2, n = 112)= 5.255, p =.022). (@indriasari2023, 12)

- A greater proportion of students in the gamification group submitted more reviews than required (24.59% vs. 9.26% in the control group).
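
The reported statistic can be checked by converting it to a p-value. This sketch assumes the comparison is a 2×2 table (condition × whether a student submitted more than five reviews), which has one degree of freedom. For one degree of freedom the upper-tail probability is erfc(√(x/2)), computed here with `math.erfc` from Python's standard library. The helper name `chi2_p_value_df1` is hypothetical.

```python
import math


def chi2_p_value_df1(statistic: float) -> float:
    """Upper-tail p-value of a chi-square statistic with 1 degree of freedom.

    A chi-square variable with 1 df is the square of a standard normal Z, so
    P(X > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    """
    if statistic < 0:
        raise ValueError("a chi-square statistic cannot be negative")
    return math.erfc(math.sqrt(statistic / 2))


p = chi2_p_value_df1(5.255)  # statistic reported in the quote
print(round(p, 3))  # prints 0.022
```

The result matches the reported p = .022. With two degrees of freedom the tail probability would instead be exp(−x/2) ≈ .072 for the same statistic, which suggests the reported p-value corresponds to a 1-df test.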

- Thus, the results support the hypothesis that the use of gamification will result in more longer reviews. (@indriasari2023, 12)

- students in the gamification group wrote significantly longer comments in all of the comment boxes compared to students in the control group. (@indriasari2023, 17)

- students in the gamification group tended to write feedback that focused on a specific aspect of the code or code assessment criteria rather than providing a more general comment. (@indriasari2023, 17)

- Students in the gamification condition also generated a greater quantity of specific advice, general advice and compliments when reviewing the work of their peers. These comments included more actionable suggestions for improvement of the work under review. (@indriasari2023, 17)

- Overall, students from both groups thought that the feedback was useful, and many emphasised the importance of receiving high-quality feedback. For example, after reading the feedback, some students discovered flaws in their code and others reported gaining new knowledge as a result of the feedback they received. Students also indicated the feedback would be useful in helping them to produce better code in the future. (@indriasari2023, 18)

- Although feedback is essential for effective student learning (Hattie & Timperley, 2007), students with poor motivation may not produce high quality feedback during the peer code review process (Indriasari et al., 2020b). (@indriasari2023, 2)

- Motivation

- To make it easier for people to read your program code, you should use meaningful variable names, and don’t use abbreviated names consisting of only 1 or 2 letters. (@indriasari2023, 11)

- I wouldn't exactly call this "specific advice," but I understand what they mean nonetheless.

- In the current study, the game elements we implemented were very simple and did not explicitly guide students to focus on certain aspects of their reviews (such as providing actionable feedback on specific elements of the code submitted, which is what we would like to observe in student feedback). Despite this, we still observed positive effects on the nature of the review feedback. Exploring more sophisticated game element designs with mechanics that are better targeted to influence review quality would be another useful avenue to explore in the future. (@indriasari2023, 18)

- This is it. I can pick up where they left off! I can try to implement a game with more sophisticated mechanics that uses more meaningful gamification rather than relying only on rewards.

Indriasari, T. D., Denny, P., Lottridge, D., & Luxton-Reilly, A. (2023). Gamification improves the quality of student peer code review. Computer Science Education, 33(3), 458–482. https://doi.org/10.1080/08993408.2022.2124094